To get everyone up to speed, the trolley problem is a thought experiment typically involving a runaway trolley heading toward five people tied to a track. Should you continue along your path or divert the trolley onto another track, where it would only harm one person? The dilemma centers on the moral choice of sacrificing one life to save many. Other versions vary our involvement, e.g., being a bystander versus actively steering the trolley.

For the curious, the trolley problem explores the ethical dilemmas of consequentialism (focusing on the outcomes of actions) and deontology (emphasizing adherence to moral rules).

The current generation of commercial autonomous vehicles (AVs) is considered Society of Automotive Engineers (SAE) Level 3: the vehicle can handle certain driving tasks under specific conditions without driver intervention, but the driver can disengage these controls and must be ready to take over. For context, airplanes are more autonomous; they can fly and land themselves, but we maintain human oversight in the form of a pilot.

It is estimated that 94% of car accidents involve human error, so there is room for improvement. AVs do have accidents but to a lesser degree. Ironically, our choosing more autonomous vehicles is a variation of the trolley problem – do we want to trade the AV “associated” deaths for the far greater human “associated” ones? But I digress. The question is whether the trolley problem is the teaching case or whether more mundane and common driving scenarios can teach an artificially intelligent vehicle what to do.

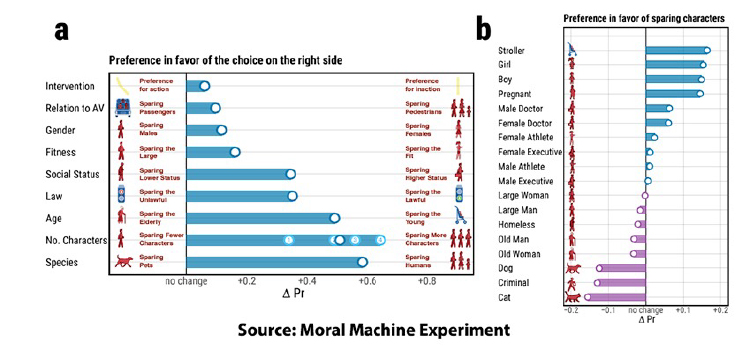

A large online study conducted by the MIT Media Lab, the Moral Machine Experiment, reported in Nature, asked over 3 million global participants to judge thirteen traffic scenarios. In these scenarios, all at their core variations of the trolley problem, the most decisive factors

“were human beings (vs. animals), the number of lives spared, age, compliance with the law, social status, and physical fitness.”

The current researchers, while acknowledging these findings, argued

“the trolley case is an inadequate experimental paradigm for informing AVs’ ethical settings because it is reductively binary and lacks ecological validity.”

Ecological validity refers to the fact that the trolley problem is rare, if it occurs at all, making the findings of the Moral Machine less generalizable to real-world situations. The binary part refers to the two underlying ethical considerations: outcomes (consequentialism) and violating ethical norms (deontology). The researchers rightly point out that human decisions often involve many other concerns. For example, we might consider the character of the individuals involved. You can see a variation on this consideration in the Moral Machine data describing social status. And, of course, we exhibit other biases, including towards gender, age, and species.

The researchers offer a different training model, the ADC model. It considers the character or intent of the agent (A), their actions or deeds (D), and the subsequent consequences (C). When all three features align, moral judgment is straightforward. The thoughtful surgeon, taking on a high-risk case with a good outcome, is morally good; the “cowboy” surgeon, taking on a patient with little hope and ending in a prolonged death, is morally bad. The ethical dilemmas arise when the three features do not align. How these three components interact is unknown and is the subject of the experiments the researchers propose.

To explore more mundane vehicle scenarios, e.g., rushing to get your child to school on time or pulling over and slowing for emergency vehicles, the researchers developed virtual reality scenarios that systematically varied the intentions, deeds, and consequences. They have completed a proof of concept study but have not published their data, anticipating a larger investigation.

They suggest that implementing the D and C of their model is straightforward. Deeds “complying with traffic norms,” i.e., stopping at a red light or pulling over for an ambulance, are good; running a stop sign is bad. Similarly, consequences may be judged by whether or not an accident occurred or, in the case of an accident, the degree of harm.
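For readers who think in code, here is a minimal Python sketch of that idea. It is my illustration, not the researchers' implementation: the names `Scenario`, `deed_valence`, `consequence_valence`, and `straightforward_judgment` are hypothetical, the agent's intent is simply assumed to be given, and consequences are reduced to whether an accident occurred. Because the researchers say the interaction of A, D, and C is unknown, the sketch only reports a verdict when all three align and returns nothing otherwise.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Valence(Enum):
    GOOD = "good"
    BAD = "bad"


@dataclass(frozen=True)
class Scenario:
    """Hypothetical encoding of one driving scenario (an assumption, not from the paper)."""
    agent_intent: Valence       # A: character or intent, assumed to be known
    obeyed_traffic_norms: bool  # D: e.g., stopped at the red light
    accident_occurred: bool     # C: simplified to accident / no accident


def deed_valence(s: Scenario) -> Valence:
    # Deeds that comply with traffic norms are good; violations are bad.
    return Valence.GOOD if s.obeyed_traffic_norms else Valence.BAD


def consequence_valence(s: Scenario) -> Valence:
    # Simplification: any accident counts as a bad consequence.
    return Valence.BAD if s.accident_occurred else Valence.GOOD


def straightforward_judgment(s: Scenario) -> Optional[Valence]:
    """Return the shared valence when A, D, and C align; otherwise None."""
    valences = {s.agent_intent, deed_valence(s), consequence_valence(s)}
    return valences.pop() if len(valences) == 1 else None


# A well-meaning driver who obeys the rules and has no accident: aligned, good.
print(straightforward_judgment(Scenario(Valence.GOOD, True, False)))
# A well-meaning driver who runs a stop sign without incident: not aligned.
print(straightforward_judgment(Scenario(Valence.GOOD, False, False)))
```

The `None` result marks exactly the cases the proposed experiments are meant to illuminate; the sketch deliberately makes no attempt to resolve them.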

That leaves great uncertainty in determining the character or intent of the individual behind the wheel. Of course, we would all like to regard ourselves as moral drivers. To paraphrase George Carlin, "Ever notice that anybody driving slower than you is an idiot, and anyone going faster than you is a maniac?" We might program our vehicle to drive safely rather than, say, quickly, in much the same way we can now choose sporty or comfortable driving modes. Or our cars could access our driving record or independently evaluate our driving behavior. Those telematic devices are already deployed. Allstate’s “Drivewise” system continuously monitors your driving and can offer discounts for safe driving when insurance is renewed.

Autonomous cars have an additional problem: limited time and maneuverability. Cars, unlike airplanes, can only move to the left or right; planes have another dimension, since they can change altitude. This enhanced dimensionality gives planes more time to react and respond; cars, not so much. This increases the number of scenarios where the only choices are “unfavorable.”

Finally, the researchers point out that the main factors contributing to trust between you and your vehicle are “respect for privacy in the data collection necessary for machine learning, cybersecurity, and clear accountability.” As I mentioned, we already freely provide our data to insurance companies offering us a discount. And installing “black box recorders” in our cars that could only be directly accessed after an accident might prove helpful. How we can maintain sufficient cybersecurity of our vehicles is unknown. Do not worry; attorneys are already on the case, as is our Department of Defense. There are legal precedents for accountability, primarily with the use of autonomous controls in aircraft. Those outcomes do not find the autonomous systems or their developers negligent; liability falls to the humans using these tools. For autonomous cars, litigation has resulted in settlements with non-disclosure agreements.

Source: Moral judgment in realistic traffic scenarios: Moving beyond the trolley paradigm for ethics of autonomous vehicles. AI and Society. DOI: 10.1007/s00146-023-01813-y