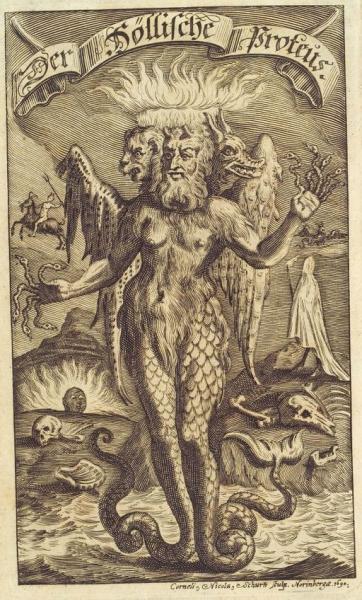

Proteus, the shape-shifting god of the Greeks, has come to stand for the malleable or changeable. John Ioannidis was perhaps the first researcher to apply the term to the varying results of scientific studies, where early studies were often contradictory and reported results that in retrospect seemed too good (or bad). While his original work was on genetic association studies and health care interventions, a group at Mayo has extended his observation to the treatment of chronic medical conditions.

The study, itself a meta-analysis, identified meta-analyses of randomized controlled trials (RCTs) for chronic conditions treated with medication or devices, published in 10 high-impact journals from 2008 to 2015. The reviewed RCTs had to report dichotomous outcomes and were restricted to studies of treatment rather than diagnosis or prognosis. Observational studies and studies of behavioral change were also excluded. Of the nearly 2,700 studies identified, 70 met the inclusion criteria and formed the basis of the report. Those 70 meta-analyses included 930 RCTs.

The Proteus effect, those extremes in early reports, was defined as present when the largest effect or greatest heterogeneity was noted in the first or second report. Heterogeneity describes the variation in outcomes between studies; statistically it “measures” the inconsistency of results not due to chance.
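To make "inconsistency not due to chance" concrete, here is a minimal sketch of one common way to quantify heterogeneity: Cochran's Q and the I² statistic. This is an illustration, not necessarily the measure the Mayo group used. The function name `i_squared` and the trial numbers are hypothetical, and the effects are assumed to be log odds ratios with known variances.

```python
def i_squared(effects, variances):
    """Return (Q, I2) for a set of study effects.

    effects   -- per-study effect estimates (assumed here: log odds ratios)
    variances -- per-study variances of those estimates
    Uses inverse-variance (fixed-effect) weights. I2 is the percentage of
    total variation attributed to between-study differences rather than chance.
    """
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Cochran's Q: weighted squared deviations from the pooled estimate
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I2 is truncated at zero when Q is smaller than its degrees of freedom
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return q, i2


# Hypothetical example: two equally precise trials that disagree
q, i2 = i_squared([0.0, 1.0], [0.1, 0.1])
print(f"Q = {q:.2f}, I2 = {i2:.0f}%")
```

An I² near zero says the trials differ about as much as chance alone would predict; a high value says they disagree more than sampling error explains.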

- 3.12% of all RCTs were identified as demonstrating the Proteus effect.

- 37.14% of all meta-analyses included these studies.

- The effect size, merely the difference in outcome irrespective of sample size, was 2.67 times larger for these early studies than the pooled values of the meta-analyses.

- The majority of studies with the Proteus effect evaluated medications rather than procedures.

- The chronic conditions demonstrating the Proteus effect included diabetes, chronic obstructive pulmonary disease, heart disease, lung cancer and chronic kidney disease.
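The effect-size half of the definition above can be sketched in a few lines. This is a hedged illustration only: it assumes effects are expressed as odds ratios (a natural choice for dichotomous outcomes, but an assumption here), it ignores the heterogeneity half of the definition, and the names `odds_ratio` and `largest_effect_is_early`, along with the trial values, are hypothetical.

```python
import math


def odds_ratio(events_t, n_t, events_c, n_c):
    """Odds ratio from a 2x2 table: treatment arm vs. control arm."""
    a, b = events_t, n_t - events_t  # treatment: events, non-events
    c, d = events_c, n_c - events_c  # control: events, non-events
    return (a * d) / (b * c)


def largest_effect_is_early(log_effects):
    """True if the largest absolute effect is in the first or second trial.

    log_effects must be in chronological order of publication.
    """
    if not log_effects:
        return False
    magnitudes = [abs(x) for x in log_effects]
    return magnitudes.index(max(magnitudes)) < 2


# Hypothetical trials in publication order (log odds ratios)
trials = [math.log(0.40), math.log(0.75), math.log(0.80), math.log(0.78)]
print(largest_effect_is_early(trials))  # the first trial is the most extreme
```

Working on the log scale treats an odds ratio of 0.5 and one of 2.0 as equally large effects in opposite directions, which is why the comparison uses absolute log values.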

Having reproduced evidence of the effect, the question is why it occurs. The researchers looked at the usual suspects for such a significant disparity: sample size, number of events, study duration and follow-up, publication bias, study setting, and sites. None of them was significantly associated with the effect. We are left only with conjecture. To be inclusive we must consider the possibility that the findings are due to chance, but three of the authors' possible explanations are worth mentioning:

- A shift over time from efficacy evaluations (does it work) to effectiveness evaluations (how well does it work).

- Earlier trials were more restrictive in patient selection (selecting for those at higher risk) than later trials.

- Earlier studies were more compulsive in applying the intervention, perhaps because they had more resources than later studies.

Clinicians not engaged in research know that “prior results do not guarantee a similar outcome.” The study shows that this is not simply a lawyerly disclaimer. The accompanying editorial suggests that early studies should prompt further, dare I say, real-world studies duplicating the results, rather than “significant practice change.” It should remind us how easy it is to say evidence-based practice and how hard it is to achieve.

Source: Treatment Effect in Earlier Trials of Patients with Chronic Medical Conditions: A Meta-epidemiologic Study. Mayo Clinic Proceedings. DOI: 10.1016/j.mayocp.2017.10.020