Some scares never go away no matter whether there is a remotely plausible basis for the scare (maybe asbestos in talc) or pure insanity (vaccines causing autism).

Today's scare (and hardly a day goes by without one) is in the first category. It is known that many nitrosamines are carcinogenic. It is also known that nitrites react with certain amines and form nitrosamines. So, it is at least reasonable to wonder whether nitrite-preserved meats might pose a cancer risk (1).

Reasonable, maybe, but a real risk?

Consider a recent paper that supposedly shows that nitrite-preserved meat gives you cancer. "A Review of the In Vivo Evidence Investigating the Role of Nitrite Exposure from Processed Meat Consumption in the Development of Colorectal Cancer" was published last month in the journal Nutrients, and it's tough to swallow. The reason: it's a meta-analysis of observational studies (2). A meta-analysis is a study of other studies in the literature, and its outcome can be predetermined by which studies are included and which are excluded.
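To see how much the choice of studies can matter, here is a minimal sketch of a standard fixed-effect (inverse-variance) meta-analysis. The five studies, their log relative risks, and their standard errors are invented for illustration and are not taken from the Nutrients paper. The names `STUDIES`, `pool`, and `report` are likewise hypothetical, and this is not necessarily the method the Nutrients authors used.

```python
import math

# Hypothetical studies: (label, log relative risk, standard error).
# These numbers are invented for illustration only.
STUDIES = [
    ("A", 0.20, 0.10),
    ("B", 0.15, 0.12),
    ("C", -0.05, 0.08),
    ("D", 0.00, 0.09),
    ("E", 0.25, 0.15),
]


def pool(studies):
    """Fixed-effect inverse-variance pooling.

    Each study is weighted by 1 / SE^2. Returns the pooled log relative
    risk and its 95% confidence interval.
    """
    weights = [1 / se ** 2 for _, _, se in studies]
    total = sum(weights)
    estimate = sum(w * log_rr for w, (_, log_rr, _) in zip(weights, studies)) / total
    se_pooled = 1 / math.sqrt(total)
    return estimate, (estimate - 1.96 * se_pooled, estimate + 1.96 * se_pooled)


def report(name, studies):
    est, (lo, hi) = pool(studies)
    significant = lo > 0 or hi < 0  # CI excludes log RR = 0 (i.e., RR = 1)
    print(f"{name}: RR = {math.exp(est):.2f}, "
          f"95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f}, significant: {significant}")


report("All five studies", STUDIES)
report("Excluding C and D", [s for s in STUDIES if s[0] not in ("C", "D")])
```

With all five made-up studies, the pooled confidence interval spans a relative risk of 1 (no effect). Quietly dropping the two null studies, C and D, yields a "significant" relative risk of about 1.2. Nothing about the underlying data changed, only the inclusion list.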

An observational study is (maybe) even worse. Huge databases full of data from old studies are mined in an attempt to find an association between two variables, in this case, nitrite-preserved meat and different cancers. Like meta-analyses, observational studies can be subjective: the results can be influenced by the choice of variables. For example, some shlub searches a bunch of databases and sees an association (3) between beer consumption and pancreatic cancer in men aged 45-65, so the shlub publishes a paper in a shlub journal with this claim. But does it really mean anything? Often the answer is no. Here's why.

What may go unreported is that if the age range considered is 45-75 or 55-80, or if women are included, the math no longer works and the effect vanishes. This tactic is a form of selection bias, often called data dredging or p-hacking, and most, if not all, of the time it means that the effect is illusory, not real. This is academic dishonesty at its finest.
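Why does slicing the data into many subgroups produce "findings"? Here is a minimal sketch, assuming the subgroup tests are independent and that no real effect exists anywhere. Under a true null hypothesis, a p-value is uniformly distributed between 0 and 1, so every extra slice is another roll of the dice. The function names and the figure of 20 slices (say, 10 age windows, each analyzed with and without women) are hypothetical choices for illustration.

```python
import random


def chance_of_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """Exact probability that at least one of num_tests independent tests of
    a true null hypothesis comes out 'significant' at level alpha."""
    return 1 - (1 - alpha) ** num_tests


def simulate_subgroup_fishing(num_subgroups: int, trials: int,
                              alpha: float = 0.05, seed: int = 0) -> float:
    """Monte Carlo check: the fraction of simulated studies in which at least
    one subgroup looks 'significant' even though there is no effect at all.

    Under the null hypothesis each p-value is uniform on [0, 1), so it is
    drawn directly with rng.random()."""
    rng = random.Random(seed)
    lucky_studies = 0
    for _ in range(trials):
        p_values = [rng.random() for _ in range(num_subgroups)]
        if min(p_values) < alpha:
            lucky_studies += 1
    return lucky_studies / trials


for k in (1, 5, 20):
    print(f"{k:>2} subgroups: exact {chance_of_false_positive(k):.0%}, "
          f"simulated {simulate_subgroup_fishing(k, trials=10_000):.0%}")
```

With 20 slices, the exact formula gives about 64%, better-than-even odds of a publishable "association" from pure noise. Real subgroups overlap, so they are not independent and the inflation is usually smaller, but it does not go away.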

So, what happens when someone publishes a meta-analysis of observational studies? Let's ask ACSH advisor, biostatistician, and all-around good guy Dr. Stan Young about this:

> Meta-analysts who use observational studies are easy to judge; claims from epidemiology studies fail to replicate over 90% of the time so garbage in, garbage out.

Stan Young, Ph.D. Private communication, 12/23/19

A little more about the paper...

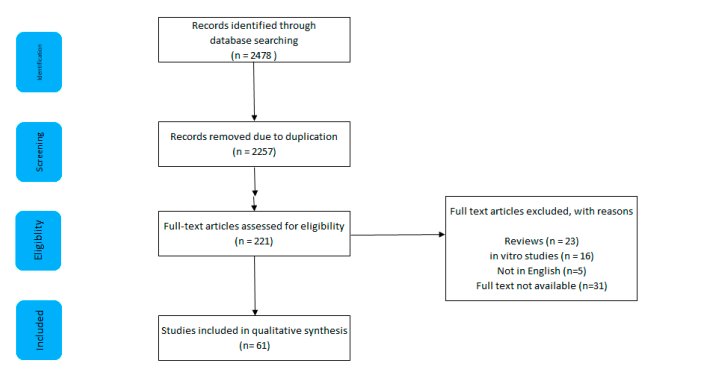

The inclusion criteria for the Nutrients paper look more or less like those of most meta-analyses:

Search strategy and results, including reasons for exclusion.

The authors started with 2,478 studies and chopped off roughly 90% of them due to duplication (that sounds like a lot), paring the number down to 221. This was further reduced to 61 studies of the original 2,478. That's not so bad. It is not uncommon to see thousands of studies pared down to fewer than 10 for reasons that may or may not make sense. It is easy to see how the exclusion criteria for meta-analyses can give a predetermined answer. Not such good science, is it?
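The arithmetic of that pare-down is easy to check. This short sketch uses only the three counts given above; the stage labels and the `retention` helper are hypothetical names chosen for illustration.

```python
def retention(stages):
    """Given (label, count) pairs in order, return (label, count, share of
    the starting count) for each stage."""
    start = stages[0][1]
    return [(label, n, n / start) for label, n in stages]


# Counts as reported in the text; the labels are paraphrases.
STAGES = [
    ("Records identified", 2478),
    ("After removing duplicates", 221),
    ("Included in the review", 61),
]

for label, n, share in retention(STAGES):
    print(f"{label:<27} {n:>5,}  ({share:.1%} of the original)")
```

About 91% of the records were dropped at the first step, and roughly 2.5% of the original 2,478 made it into the final analysis.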

Without going into too much detail (I'm not in the mood to kill myself tonight, at least not yet), here are some of the conclusions from the Nutrients paper:

- In 28 prospective studies that examined the relationship between processed meat and colorectal cancer (CRC), 16 showed no significant increase in CRC in people who ate processed meat. Eleven showed a significant increase. In one, it was impossible to tell.

- In 37 retrospective studies, 22 showed significant increases in CRC, 14 did not, and one showed a significant decrease in CRC.
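Tallying "significant" versus "not significant" results, as the bullets above do, is known as vote counting. Here is a minimal sketch that simply tabulates the figures from the bullets; the category labels, the `TALLIES` layout, and the `shares` helper are illustrative choices, not anything from the paper. Keep in mind that vote counting ignores how large each effect was and how precise each study was, which is one reason methodologists generally discourage it.

```python
# Study-level tallies as listed in the bullets above.
TALLIES = {
    "prospective": {
        "significant increase": 11,
        "no significant increase": 16,
        "unclear": 1,
    },
    "retrospective": {
        "significant increase": 22,
        "no significant increase": 14,
        "significant decrease": 1,
    },
}


def shares(tally):
    """Return each category's share of the studies for one study design."""
    total = sum(tally.values())
    return {category: count / total for category, count in tally.items()}


for design, tally in TALLIES.items():
    print(f"{design} ({sum(tally.values())} studies):")
    for category, share in shares(tally).items():
        print(f"  {category:<24} {share:.0%}")
```

Roughly 39% of the prospective studies reported a significant increase, compared with about 59% of the retrospective ones.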

I'm going to stop right here, even though I've covered maybe 10% of the paper. The rest consists of a whole bunch of animal data, plus lots of speculation involving dozens of variables in the quantities and types of meat, and potential mechanisms of nitrosamine formation under different sets of conditions. Some of it may be legitimate, but it is irrelevant here. The premise of this study, that a meta-analysis of retrospective studies can in any way prove anything, is all you really need to know. And these data don't even support the hypothesis, as the small effect shows. The rest is window dressing.

And there are lots of windows here.

NOTES:

(1) Most dietary nitrates/nitrites come from vegetables, not meat. And the amount in vegetables is swamped by the amount we produce in our own saliva. See: NPR Doesn't Know Spit About Saliva, Nitrates Or Deli Meat

(2) Observational studies are also called retrospective studies. They examine data from studies that have already occurred and have no fixed endpoint. By contrast, prospective studies involve a preestablished endpoint and measure what happens after a given treatment, diet, etc., begins. Prospective studies are infinitely better than retrospective ones.

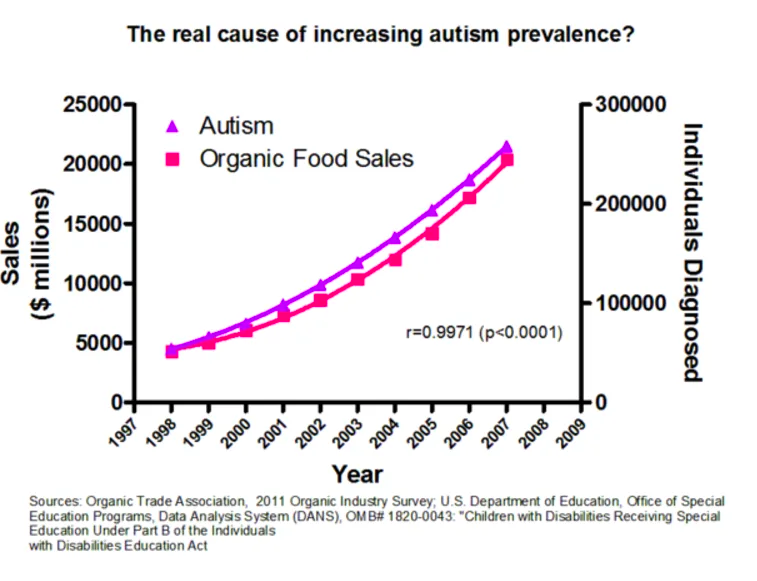

(3) "Correlated with," "associated with," and "linked to" are tricky terms that are commonly used in epidemiological papers. They tell us nothing about cause and effect. Sales of organic food are clearly "correlated with" an increase in autism, but these two cannot possibly have anything to do with each other. When enough pairs of variables are examined, there will be freaky outcomes like this now and then, but only by chance.
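The "enough pairs of variables" point is easy to demonstrate. Here is a minimal sketch, assuming entirely made-up data: 40 variables of pure random noise with 10 observations each (think 10 years of annual figures), with every pair tested for correlation. The variable counts, the seed, and the function names are hypothetical choices for illustration.

```python
import itertools
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def count_chance_correlations(num_variables=40, num_observations=10,
                              threshold=0.632, seed=1):
    """Generate independent random variables and count how many pairs have
    |r| above threshold. 0.632 is roughly the two-sided 5% critical value of
    r for 10 observations (8 degrees of freedom)."""
    rng = random.Random(seed)
    data = [[rng.gauss(0, 1) for _ in range(num_observations)]
            for _ in range(num_variables)]
    pairs = list(itertools.combinations(range(num_variables), 2))
    hits = sum(1 for i, j in pairs
               if abs(pearson_r(data[i], data[j])) > threshold)
    return hits, len(pairs)


hits, total = count_chance_correlations()
print(f"{hits} of {total} pairs of unrelated variables look 'significantly' correlated")
```

Expect about 5% of the 780 pairs, roughly 39, to cross the line, even though every variable is pure noise. Pick one of those pairs, give the variables scary names, and you have a headline.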