“We should stop training radiologists now. It’s just completely obvious that within five years, deep learning is going to do better than radiologists.”

- Geoffrey Hinton, Ph.D., 2016 [1]

Some of the most significant advances in the use of artificial intelligence in medicine have come in radiology, which supplies computers with millions of images in the digital format they need for learning. Radiology seems to be perpetually on the cusp of being disrupted by these systems. As a real-world measure of progress, researchers had a commercially available AI system trained for diagnostic radiology “sit” for one portion of the British radiology boards, the Fellowship of the Royal College of Radiologists (FRCR) examination. Radiologists must pass this examination after their training to practice independently.

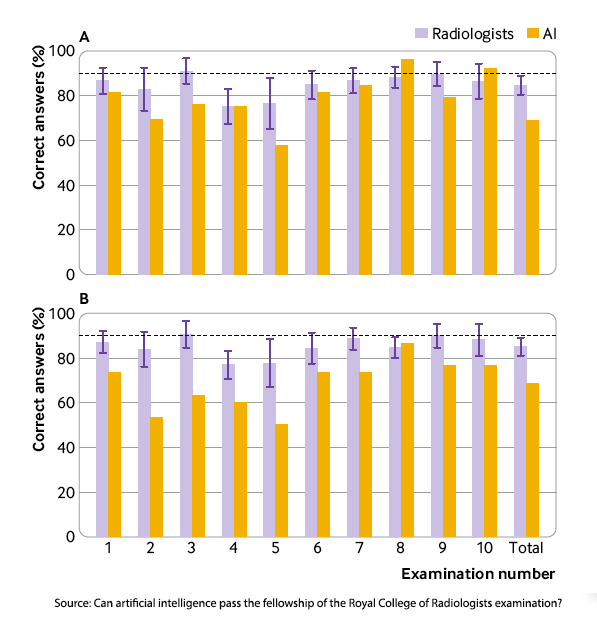

Board examinations usually involve written and practical components. In this instance, the AI program and ten human test takers were each asked to interpret 30 diagnostic images in 35 minutes, repeated over 10 separate sessions. This “rapid reporting” section is meant to simulate real-world conditions, where radiologists are given little clinical information and must make quick judgments as images stream in from offices and emergency departments. About half of the images are normal, with no pathologic findings; each of the rest contains a single pathologic finding. To pass this section of the board examinations, a candidate must correctly interpret at least 27 of the 30 studies (90%).

The test images came from a set of practice cases that one of the authors used to prepare radiology residents for the examination. They were felt to be a relatively accurate reflection of the images used by the board examiners. [2] The ten humans were all recent graduates who had passed their board examinations. The AI program was a commercially available “medium risk” medical device trained to detect commonly seen abnormalities of the chest and musculoskeletal system. [3]

The testing consisted of ten 35-minute sessions of 30 images each, for 300 responses in total. Because the “rapid reporting” section covers all of diagnostic radiology, the researchers reported both how well the AI program fared in the areas in which it had been “trained” and how it fared in the additional areas required of its human counterparts. They also sought to emulate the behavior of exam takers faced with an image they cannot interpret by marking such responses as either normal or abnormal. For our purposes, we will concentrate on how the AI did in the areas it was trained on.

Graph A shows results for images of pathology the AI program was trained on; graph B includes pathology unfamiliar to the AI program. There is a lot to unpack here. First, the obvious: with two exceptions, the humans outperformed the AI where both had been trained, and when unfamiliar pathology was introduced, the AI failed across the board. Second, while the humans fared better, theirs was not a stellar performance: on average, the newly minted radiologists passed 4 of the 10 mock examinations.

“The artificial intelligence candidate … outperformed three radiologists who passed only one mock examination (the artificial intelligence candidate passed two). Nevertheless, the artificial intelligence candidate would still need further training to achieve the same level of performance and skill of an average recently FRCR qualified radiologist, particularly in the identification of subtle musculoskeletal abnormalities.”

The abilities of AI radiology programs remain brittle. They cannot extend beyond their training set and, as this testing shows, are not ready for independent work. All of this speaks to a point Dr. Hinton made in a less hyperbolic moment:

“[AI in the future is] going to know a lot about what you’re probably going to want to do and how to do it, and it’s going to be very helpful. But it’s not going to replace you.”

We would be better served by seeing AI diagnostics as part of our workflow: a second set of eyes on the problem or, in this case, the image. Interestingly, the researchers in this study asked the radiologists how they thought the AI program would do. The radiologists overestimated it, expecting it to do better than humans in 3 of the examinations. That suggests a bias, conscious or not, toward trusting the AI over themselves. Hopefully, experience and a clearer understanding of AI radiology's weaknesses will recalibrate that expectation.

[1] Dr. Hinton is a recipient of the Turing Award, academic computing's highest honor and often called its “Nobel Prize,” and one of the seminal figures in developing AI models and systems. “Hinton typically declines to make predictions more than five years into the future, noting that exponential progress makes the uncertainty too great.” Perhaps this was one time he should have declined.

[2] In retrospect, the results suggest the questions were somewhat harder than those on the actual examination. The board examiners declined to provide the researchers with old images, fearing it would compromise the integrity of the testing process. Parenthetically, in my younger academic days, when I researched how the surgical boards framed their questions, I met the same resistance.

[3] More specifically, “fracture, pleural effusion, lung opacification, joint effusion, lung nodules, pneumothorax, and joint dislocation.”

Source: “Can artificial intelligence pass the Fellowship of the Royal College of Radiologists examination? Multi-reader diagnostic accuracy study.” BMJ. DOI: 10.1136/bmj-2022-072826