This is the second part of a two-part series. Read part one here.

What is the magnitude of the data fraud polluting scientific research, and what are its sources?

When dozens, hundreds, or thousands of studies address a scientific question or controversy, there will invariably be differing conclusions, if for no other reason than chance or the use of conflicting data. So, how are such research controversies adjudicated and resolved? An approach favored by the scientific establishment is to conduct what’s called a meta-analysis, a statistical analysis that combines the results of multiple scientific studies, often including randomized controlled trials. Many researchers believe that systematically combining qualitative and quantitative data from multiple studies, sometimes numbering in the hundreds, yields statistically stronger evidence than any single study, turning noise into signal.

An example: resolving the debate over the nutritional superiority of organic foods

Often, the process works. For years, the accepted wisdom was that organic food is healthier than conventional alternatives. Some individual studies suggested that organically grown food is more nutritious, carries fewer health risks, and can reduce the risk of pesticide exposure. But many health professionals had their doubts.

The issue intrigued two prominent researchers at Stanford University’s Center for Health Policy, Dena Bravata and Crystal Smith-Spangler. Bravata, the chief medical officer at the health-care transparency company Castlight Health, said she launched the project because so many of her patients asked her whether organic foods were worth the higher prices, which are often double the cost of conventional alternatives or more.

Bravata discovered that she couldn’t look to individual studies to resolve the controversy. There were literally thousands of them, each with its own data set and often reaching contradictory conclusions. Which ones were most trustworthy?

“This was a ripe area in which to do a systematic review,” said first author Smith-Spangler, who partnered with Bravata and other Stanford colleagues to conduct a meta-analysis.

According to the lead authors, they sifted through thousands of papers to identify the 237 they believed were most relevant to include in the analysis. Their conclusion shocked and rocked the food industry.

“Some believe that organic food is always healthier and more nutritious,” said Smith-Spangler. “We were a little surprised that we didn’t find that.” Most notably, although they found that organic food contained fewer added pesticides, they also found that pesticide levels in all foods fell within allowable safety limits.

The Stanford study’s conclusion touched off a tempest, with angry pushback from acolytes and self-interested elements of the organic industry, such as food writers Michael Pollan and Mark Bittman.

But numerous independent subsequent meta-analyses (not funded by the organic or conventional food industries) have not conflicted with the Stanford study’s conclusions. The most convincing: A follow-up report in 2020 based on 35 studies distilled from 4,329 potential articles by researchers at Australia’s Southern Cross University concluded that the “current evidence base does not allow a definitive statement on the health benefits of organic dietary intake.”

What can go wrong?

Ironically, meta-analyses can often create the very kind of misinformation they were designed to combat.

How are meta-analyses executed? A computer search finds published articles that address a particular question, such as whether taking large amounts of vitamin C prevents colds. The studies considered methodologically sound are selected, and their data are consolidated in the meta-analysis. (Usually, the people performing the meta-analysis do not have access to the raw data used in the individual studies, so summary statistics from each study are carried over instead.) If the weight of evidence, based on a very stylized analysis, favors the claim, the claim is deemed accurate and is often canonized.
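To make the pooling step concrete, here is a minimal sketch in Python of one common way to combine summary statistics: inverse-variance (fixed-effect) weighting. It is an illustration under stated assumptions, not the method used in any study discussed here. The function name `pool_fixed_effect` and the four effect estimates and standard errors are hypothetical.

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Pool per-study effect estimates with inverse-variance weights.

    Each study contributes its summary estimate and standard error only;
    no raw data are needed. Returns (pooled estimate, pooled standard
    error, two-sided p-value under a normal approximation).
    """
    # More precise studies (smaller standard error) get larger weights.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    z = pooled / pooled_se
    # Two-sided tail probability of a standard normal: erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return pooled, pooled_se, p_value


# Hypothetical summary statistics for four studies (illustration only).
estimates = [0.30, 0.10, -0.05, 0.20]
std_errors = [0.15, 0.10, 0.12, 0.20]

pooled, pooled_se, p_value = pool_fixed_effect(estimates, std_errors)
print(f"pooled estimate = {pooled:.3f}, SE = {pooled_se:.3f}, p = {p_value:.3f}")
```

Note that the pooled result is only as trustworthy as its inputs: if the per-study estimates are exaggerated, the weighted average inherits that exaggeration.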

The problem is that there may not be safety in numbers because many individual papers included in the analysis could very well be exaggerated or wrong, the result of publication bias and p-hacking (the inappropriate manipulation of data analysis to enable a favored result to be presented as statistically significant).

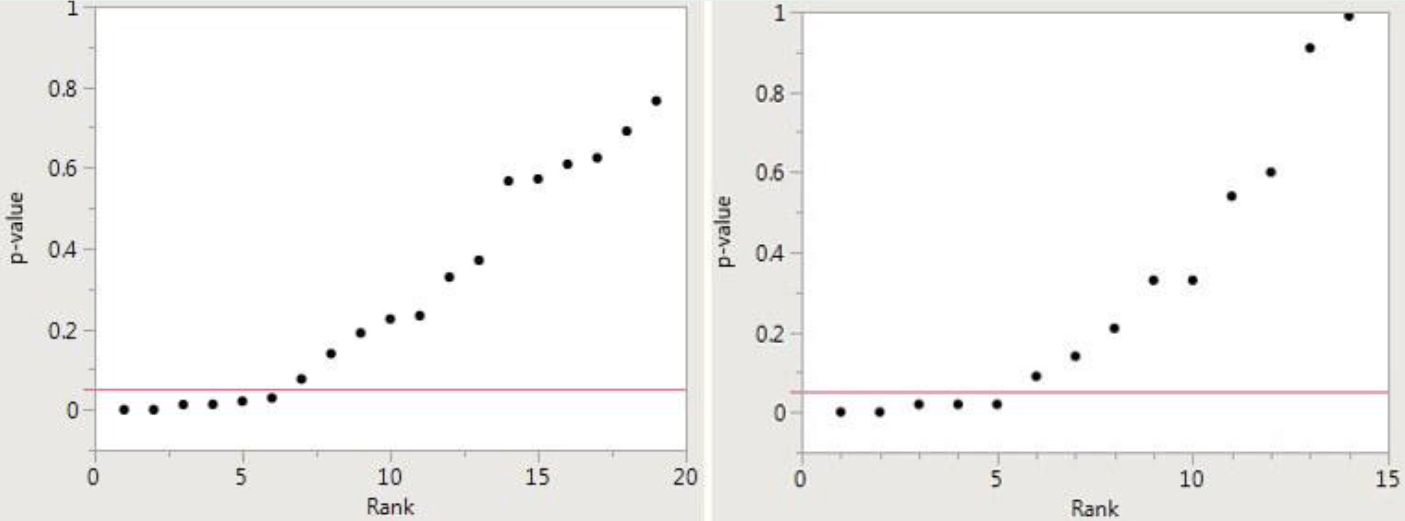

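Potential p-hacking can be detected by creating a “p-value plot,” which ranks the p-values reported by the studies in a meta-analysis from smallest to largest and plots them in that order. (A p-value indicates how likely a difference between groups at least as large as the one observed would be if chance alone were at work.) For example, the figure on the left above plots a meta-analysis in which there were 19 papers; in the figure on the right, the meta-analysis included 14 papers.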

Recall that a small p-value is taken to mean that an effect is real, as opposed to having occurred by chance. The smaller the p-value, the more likely the effect is real. If the resulting p-value plot looks like a hockey stick, with small p-values on the blade and larger p-values on the handle (as in the two figures above), there is a case to be made for p-hacking.
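A p-value plot of this kind can be sketched in a few lines of Python. The sketch assumes the common construction in which p-values are sorted and plotted against their rank. The function names and the ten p-values are hypothetical, chosen only to show a hockey-stick shape, and are not taken from the meta-analyses discussed here. If no effect existed and nothing had been manipulated, the sorted p-values would be expected to climb roughly along a straight line, which is what `uniform_reference` computes for comparison.

```python
def p_value_plot_points(p_values):
    """Return (rank, p-value) pairs with p-values sorted ascending."""
    return list(enumerate(sorted(p_values), start=1))


def uniform_reference(n):
    """Expected sorted p-values if all n studies reflected pure chance.

    For n independent uniform p-values, the k-th smallest has
    expected value k / (n + 1).
    """
    return [rank / (n + 1) for rank in range(1, n + 1)]


def render_text_plot(points, width=40):
    """Draw a crude horizontal bar per study; bar length tracks the p-value."""
    lines = []
    for rank, p in points:
        bar = "#" * round(p * width)
        lines.append(f"{rank:>3} {p:6.3f} {bar}")
    return "\n".join(lines)


# Hypothetical p-values (illustration only): a few very small values,
# then a steady climb, i.e. a blade followed by a handle.
p_values = [0.62, 0.001, 0.35, 0.012, 0.91, 0.004, 0.48, 0.03, 0.77, 0.21]

points = p_value_plot_points(p_values)
print(render_text_plot(points))
print("reference line:", [round(x, 2) for x in uniform_reference(len(points))])
```

Comparing the sorted values with the reference line shows where the smallest p-values sit well below what chance alone would be expected to produce.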

The figures above are derived from meta-analyses of the supposedly beneficial effects of omega-3 fatty acids (left figure) and the alleged direct relationship between sulfur dioxide in the air and mortality (right). They were presented in a major medical journal and purportedly showed a positive effect. But that conclusion is, at best, dubious.

The p-values are represented along the vertical axis in each graph. Several of the reported p-values fall below 0.05; taken alone, they would indicate a real effect. However, more of the p-values are greater than 0.05, which indicates that the claimed effect is a mirage.

Clearly, the positive and negative studies cannot both be correct, and the negative studies are more numerous. P-hacking is a logical explanation for the presence of a small number of low p-values. The most likely conclusion is that these meta-analyses yielded false-positive results and that there is no effect.

Challenge to the science research community

A news article in the journal Nature recently addressed the data on research fraud. The breadth of the misconduct identified by the author, the journal’s features editor, is shocking, both in its magnitude and in how successfully fraud has been institutionalized and commercialized:

The scientific literature is polluted with fake manuscripts churned out by paper mills — businesses that sell bogus work and authorships to researchers who need journal publications for their CVs. But just how large is this paper-mill problem?

An unpublished analysis shared with Nature suggests that over the past two decades, more than 400,000 research articles have been published that show strong textual similarities to known studies produced by paper mills. Around 70,000 of these were published last year alone (see ‘The paper-mill problem’). The analysis estimates that 1.5–2% of all scientific papers published in 2022 closely resemble paper-mill works. Among biology and medicine papers, the rate rises to 3%.

Thus, a substantial percentage of published science and the canonized claims resulting from it are likely wrong, sending researchers chasing false leads. Without research integrity, we don’t know what we know. Therefore, it is incumbent on the scientific community — researchers, journal editors, universities, and funders — to find solutions.

Henry I. Miller, a physician and molecular biologist, is the Glenn Swogger Distinguished Fellow at the American Council on Science and Health. He was the founding director of the FDA’s Office of Biotechnology. Find Henry on X @HenryIMiller

Dr. S. Stanley Young is a statistician who has worked at pharmaceutical companies and the National Institute of Statistical Sciences on questions of applied statistics. He is an adjunct professor at several universities and a member of the EPA’s Science Advisory Board.