Well, this is certainly interesting. An article from CNBC that I posted on my personal Facebook page has been flagged by Facebook fact-checkers as containing "partly false information."

As a professional fake news and junk science debunker, I am not used to being on the receiving end of debunkery. Besides, as my colleague Dr. Chuck Dinerstein is fond of saying, "I'm often wrong, but never in doubt." The unwritten but widely accepted 11th Commandment is, "Thou shalt not debunk the junk science debunker." Yet, here we are.

What makes this incident intriguing is that, when I posted the article, the information contained within it was absolutely true. An official at the World Health Organization really did say that "truly asymptomatic" transmission of coronavirus was "very rare," and this bombshell was reported by CNBC. The next day, however, the WHO retracted the comment.

So, this leads to the following dilemma: Should Facebook be in the business of "debunking" news and scientific reports when events are rapidly changing? What's true one day may be thought false the next day... and then treated as true again a week later. Furthermore, does Facebook have the expertise to do this? If experts can't even agree on what is true about COVID-19, why should Facebook think of itself as the ultimate arbiter of truth?

Facebook Fact-Checkers Are Doing the Right Thing, But There Is Danger

Having said all that, I think Facebook is doing the right thing. In this particular case, the WHO official miscommunicated, and the organization issued a clarification. So, I think Facebook is on solid ground to flag the original statement as "false." Even better, it provided a mini-explainer, which could be clicked for further information. Here it is:

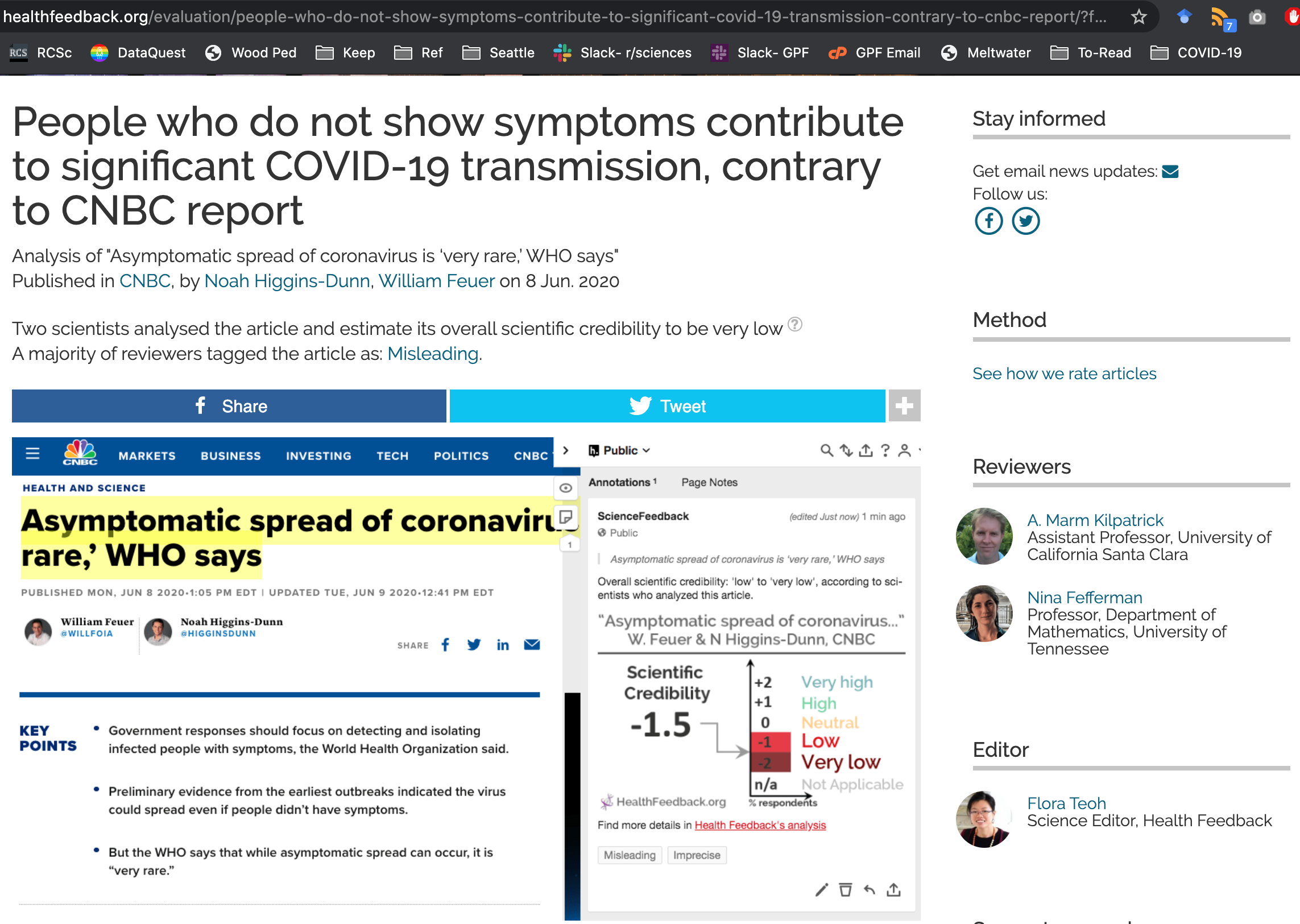

When I clicked on this, I was redirected to an article on a website called HealthFeedback.org, whose mission is "sorting fact from fiction in health and medical media coverage." The CNBC article was fact-checked by two professors, one of whom noted, "This is more the fault of the WHO than the reporting, but the reporting about the WHO conclusion misinterprets the WHO findings."

On the whole, Facebook did a good job here. But danger lurks.

Who Fact-Checks the Fact-Checkers?

Fact-checking is neither as easy nor as straightforward as it might seem.

Consider the following statement: The #1 problem in the world is poverty. I believe that statement is true, but many others do not. The statement involves both a fact-based acknowledgment of reality (i.e., a substantial amount of poverty really does exist) and an opinion about the magnitude of the problem compared to other threats. People cannot dispute the former but are welcome to disagree with the latter. So, is the statement true or false? Well, it's impossible to say. It depends on your priorities and values.

There are other problems with fact-checking. It is unfortunately susceptible to political bias, just like all other forms of news reporting and analysis. Matt Shapiro at the Paradox Project convincingly demonstrates that PolitiFact (the site that famously uses "pants on fire" as one of its ratings) displays a bias in its fact-checking.

Additionally, how can a fact-checker accurately discern truth from fiction in a fast-moving situation, such as a riot or a war? The term "fog of war" refers to the inherent uncertainty of information coming out of the battlefield. The scientific enterprise itself is sort of a battlefield, too, just a slow-motion one. An article published one day might be challenged by another article published a month (or a year or years) later. Which article is "wrong"? And do fact-checkers or social media giants like Facebook really have the expertise to determine that?

There are no easy solutions. On the whole, more skepticism and fact-checking are better than less. But they're not a panacea.