Several years ago, questions were rightfully raised over an apparent racial bias in the calculation of an individual's kidney function, the creatinine clearance. Creatinine is a waste product of our diet and of muscle wear and tear; because the kidneys filter it out, its level in the blood can be used as a measure of the kidneys' filtering function. In developing the algorithm, the higher levels of creatinine in Black/African Americans were ascribed to their "greater muscle mass," diet, and some biological difference in their kidney function. This was wrong and resulted in false negatives and underdiagnosed kidney disease in these individuals. That error has prompted a review of the use of race, "a social construct," in medicine. A new study argues that leaving race out of algorithms can be just as detrimental as including it.

“The inclusion of race and ethnicity in clinical decision-making has been highly scrutinized because of concerns over racial profiling and the subsequent unequal treatment that may be a result of judging an individual’s risks differentially based on differences in averages in the racial and ethnic groups to which they belong.”

The study appeared in JAMA Network Open; the predictive model it examined estimates the risk of colorectal cancer (CRC) recurrence. The value of such a predictive model is that it can personalize postoperative surveillance, reducing unnecessary tests while hopefully maximizing the benefit of identifying recurrent disease as early as possible. One more recently developed model used race as a variable since African Americans are disproportionately impacted, with an incidence more than 20% higher than that of White Americans. The researchers asked how this model’s predictive value changed with and without the use of race as one of its variables.

To get an answer, they used Kaiser Permanente Southern California’s patient care registry, linking data on race to adults who were diagnosed with CRC, underwent surgery between 2008 and 2013, and were followed until 2018 – a reasonable period to look at the model’s ability to discern those at risk for recurrence. They compared the model's predictions to the known outcomes of 4,230 patients, roughly half female, with a mean age of 65. The racial mix reflected SoCal with about 12% Asian, Hawaiian, or Pacific Islander, 13% Black or African American, 22% Hispanic, and 53% non-Hispanic White.

They considered four variations of the model.

- Race-neutral – race was excluded

- Race-sensitive – race was included as well as a variable that considered racial differences in recurrences based upon a patient’s initial staging

- Race-stratified – separate models for each racial group

- Interactive – race interacted statistically with all the other variables
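To make the distinction concrete, here is a minimal Python sketch of how the four variants might differ in the inputs they hand to a model. The feature names (`age`, `stage`), the group labels, and the helper functions are all hypothetical illustrations, not the study's actual predictors or code.

```python
from typing import Dict, List

# Hypothetical group labels; the last one serves as the reference category.
RACES = ["asian_pi", "black", "hispanic", "white"]

Patient = Dict[str, object]
Features = Dict[str, float]


def race_dummies(race: str) -> Features:
    """One indicator per non-reference group (dummy coding)."""
    return {f"race_{r}": 1.0 if race == r else 0.0 for r in RACES[:-1]}


def race_neutral(p: Patient) -> Features:
    """Race-neutral: clinical predictors only, race excluded."""
    return {"age": float(p["age"]), "stage": float(p["stage"])}


def race_sensitive(p: Patient) -> Features:
    """Race-sensitive: race indicators plus a race-by-stage term."""
    feats = race_neutral(p)
    dummies = race_dummies(str(p["race"]))
    feats.update(dummies)
    feats.update({f"{k}_x_stage": v * feats["stage"] for k, v in dummies.items()})
    return feats


def interactive(p: Patient) -> Features:
    """Interactive: race indicators interact with every other predictor."""
    base = race_neutral(p)
    dummies = race_dummies(str(p["race"]))
    feats = {**base, **dummies}
    for k, v in dummies.items():
        for name, x in base.items():
            feats[f"{k}_x_{name}"] = v * x
    return feats


def race_stratified(patients: List[Patient]) -> Dict[str, List[Features]]:
    """Race-stratified: split the data so each group gets its own model."""
    groups: Dict[str, List[Features]] = {}
    for p in patients:
        groups.setdefault(str(p["race"]), []).append(race_neutral(p))
    return groups
```

The point of the sketch is only that "using race" is not one choice but several: it can enter as a simple indicator, as a modifier of other predictors, or as the basis for separate models.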

“There is no single accepted criterion for evaluating algorithmic fairness.”

The outcome of interest was a statistical measure, “algorithm fairness.” This is a new area of research and concern focusing on both mathematical and ethical approaches to fairness – do a model’s incorrect predictions “systematically disadvantage” one group or another? The researchers looked at each model’s equality of:

- Calibration – How well do the model’s results reflect reality?

- Discriminative ability – How accurately does the model discriminate between outcomes?

- False Positives and Negatives – How many and what type of errors are made by the model?

- Positive and Negative Predictive values – A variation of false positive and negative errors; these metrics consider how well the model does or does not predict a specific event, in this case, CRC recurrence.
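For readers who want to see what these measures look like in practice, the following is a rough Python sketch that computes them separately for each group. It assumes each patient is reduced to a hypothetical `(group, predicted_risk, recurred)` record and that a risk of 0.5 or more counts as a positive prediction; the study's own thresholds and statistical methods are not reproduced here. Calibration is simplified to the gap between average predicted risk and the observed recurrence rate, and discriminative ability to a pairwise concordance (the quantity behind the C-statistic).

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, float, bool]  # (group, predicted_risk, recurred)


def _ratio(num: float, den: float) -> float:
    """Safe division; undefined ratios are reported as NaN."""
    return num / den if den else float("nan")


def group_metrics(records: List[Record], threshold: float = 0.5) -> Dict[str, Dict[str, float]]:
    """Per-group calibration gap, error rates, and predictive values."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0,
                                  "risk_sum": 0.0, "events": 0, "n": 0})
    for group, risk, recurred in records:
        c = counts[group]
        flagged = risk >= threshold  # model predicts recurrence
        if flagged and recurred:
            c["tp"] += 1
        elif flagged:
            c["fp"] += 1
        elif recurred:
            c["fn"] += 1
        else:
            c["tn"] += 1
        c["risk_sum"] += risk
        c["events"] += int(recurred)
        c["n"] += 1

    out = {}
    for group, c in counts.items():
        out[group] = {
            # mean predicted risk minus observed recurrence rate
            "calibration_gap": _ratio(c["risk_sum"], c["n"]) - _ratio(c["events"], c["n"]),
            "fpr": _ratio(c["fp"], c["fp"] + c["tn"]),  # false positive rate
            "fnr": _ratio(c["fn"], c["fn"] + c["tp"]),  # false negative rate
            "ppv": _ratio(c["tp"], c["tp"] + c["fp"]),  # positive predictive value
            "npv": _ratio(c["tn"], c["tn"] + c["fn"]),  # negative predictive value
        }
    return out


def concordance(records: List[Record]) -> float:
    """Share of (recurred, not recurred) pairs ranked correctly; ties count half."""
    events = [risk for _, risk, y in records if y]
    non_events = [risk for _, risk, y in records if not y]
    if not events or not non_events:
        return float("nan")
    score = sum(1.0 if e > n else 0.5 if e == n else 0.0
                for e in events for n in non_events)
    return score / (len(events) * len(non_events))
```

Fairness, in this framing, is about how much each number differs from one group to the next, not about how good any single number is.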

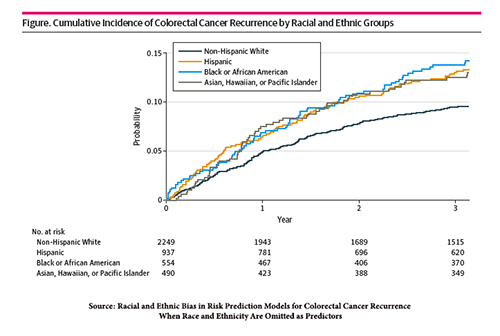

The graphic displays the incidence of CRC recurrence and its racial disparity.

The calibration of the models was very good, reflecting clinical reality; the race-sensitive model differed the least between racial groups. The overall discriminative ability, separating those with and without recurrences, fell in a range generally considered "acceptable," with the race-sensitive model again differing the least between racial groups.

The race-neutral model had the lowest number of false positives, a result that would lead to less testing; the race-sensitive model had the most false positives, resulting in more unnecessary tests. The effect on false negatives was a bit more complex. The race-sensitive model lessened false negatives, improving care, for every group except non-Hispanic Whites, for whom false negatives increased, which would result in "missed" patients of concern. The race-neutral model was the worst at positive and negative predictive values; the race-sensitive model's negative predictive value was better if you were Asian, Hawaiian, or Pacific Islander.

“We demonstrated in the CRC recurrence setting that the simple removal of the race and ethnicity variable as a predictor could lead to higher racial bias in model accuracy and more inaccurate estimation of risk for racial and ethnic minority groups, which could potentially induce disparities by recommending inadequate or inappropriate surveillance and follow-up care disproportionately more often to patients of minoritized racial and ethnic groups.”

The initial conclusion tells us that using or not using race as a variable does not inherently create bias – as with many things, it depends. Race is a variable that contains additional baggage; it includes information on education, income, and exposure to risk factors, among other social determinants of health. Moreover, those other determinants will vary with the group you consider; racial groups embedded in poverty will be different from the same racial groups living in wealth. Even the definition of race can make a difference. African Americans have an overall incidence of CRC of about 60/100,000, and South African Blacks 5/100,000. As the authors write,

“There is no one-size fits all solution to designing equitable algorithms.”

This evidence-based observation should make us all reconsider the hype and rush to bring AI algorithms to healthcare. The researchers end by mentioning the trade-off between the statistical fairness they measured and ethical fairness. Fairness in treatment “posits that all individuals with similar clinical characteristics should be offered the same care regardless of their race and ethnicity.” Fairness in outcome requires that the “disadvantaged group should be given more resources to achieve similar health outcomes as the advantaged group.”

Algorithmic medical care may standardize treatment, promoting fairness of treatment, but there is no guarantee that algorithms will raise everyone up, rather than pull everyone down, to reach that standard. It is hubris to believe that adding or subtracting a variable from a model will promote fairness in treatment, let alone in outcome.

Source: Racial and Ethnic Bias in Risk Prediction Models for Colorectal Cancer Recurrence When Race and Ethnicity Are Omitted as Predictors. JAMA Network Open. DOI: 10.1001/jamanetworkopen.2023.18495