“The coelacanth has perfected life in the slow lane in a different way. Almost uniquely among animals, their normal position is head down, tail up, perfectly vertical and still.”

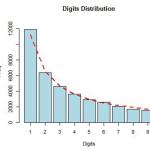


Let's pretend that you're a dishonest scientist who wants to fabricate some data for an experiment. Let's further assume that you run an experiment five times and want the average answer to be "10".
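The constraint in this setup is plain arithmetic: five results average 10 only if they add up to 50. Once four values have been invented, the fifth is forced. The sketch below shows that; `force_average` is a hypothetical helper written for illustration, and the four input values are made up, not taken from any real experiment.

```python
from statistics import mean


def force_average(invented: list[float], target_mean: float, n_runs: int) -> list[float]:
    """Hypothetical helper: append the one final value that makes n_runs
    results average exactly target_mean.

    n_runs values average target_mean only if they sum to
    target_mean * n_runs, so the last value is fully determined.
    """
    if len(invented) != n_runs - 1:
        raise ValueError("supply exactly n_runs - 1 invented values")
    required_total = target_mean * n_runs
    return invented + [required_total - sum(invented)]


# Four made-up results (illustrative values only).
runs = force_average([9, 12, 8, 11], target_mean=10, n_runs=5)
print(runs)        # [9, 12, 8, 11, 10]
print(mean(runs))  # 10
```

Nothing in the arithmetic itself reveals that the numbers were invented; any four values can be "balanced" by the fifth.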

Nate Silver is a statistician and self-appointed electoral guru.

The U.S. presidential election has just come to a close, but the postmortem is only beginning. Both candidates will wonder why they didn't perform better, and pollsters will wonder the same.

Candidate Gobermouch is leading in the polls over Candidate Fopdoodle, 48% to 43%, a difference of 5 percentage points. The poll's margin of error is plus or minus 3 percentage points. Does Gobermouch have a lead over Fopdoodle that is outside the margin of error?
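One standard way to check is sketched below, under stated assumptions: a simple random sample, 95% confidence, and a reported 3-point margin that is the usual worst-case figure for a single share (p = 0.5). Real polls weight their samples, which widens the error. The key point is that the reported margin applies to each candidate's share separately, while the lead combines two shares from the same poll, so its margin is larger. This may not be the method the original article uses, and the function names are illustrative.

```python
import math

Z_95 = 1.96  # two-sided 95% critical value of the standard normal


def implied_sample_size(moe: float, z: float = Z_95) -> float:
    """Sample size implied by a reported margin of error, assuming it was
    computed for a single proportion at its worst case, p = 0.5."""
    return (z * 0.5 / moe) ** 2


def lead_margin_of_error(p1: float, p2: float, n: float, z: float = Z_95) -> float:
    """Margin of error for the lead p1 - p2 when both shares come from one poll.

    For multinomial counts, Var(p1_hat - p2_hat) = (p1 + p2 - (p1 - p2)**2) / n;
    the shares are negatively correlated, so the variance of the gap is
    larger than the variance of either share alone.
    """
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)


n = implied_sample_size(0.03)                   # about 1,067 respondents
lead = 0.48 - 0.43                              # the 5-point lead
moe_lead = lead_margin_of_error(0.48, 0.43, n)  # about 5.7 points
print(round(n), round(moe_lead * 100, 1))       # 1067 5.7
print(lead > moe_lead)                          # False
```

Under these assumptions the margin of error on the gap is about 5.7 points, close to double the headline 3 points, so a 5-point lead is not outside it.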

Nine American tourists have died this year under mysterious circumstances in the Dominican Republic. Understandably, many are wondering if it's safe to visit the country.

By Kai Zhang, University of North Carolina at Chapel Hill

As the saying goes, "There are three kinds of lies: lies, damned lies, and statistics." We know that's true because statisticians themselves just said so.

Neil Sedaka once noted that breaking up is hard to do. Even harder, it seems, is doing good science. Why?

It's difficult to imagine what life must be like for people who work in public relations at United Airlines.