The U.S. presidential election just came to a close, but the postmortem is only beginning. Both candidates will wonder why they didn't perform better, and pollsters will wonder the same. Indeed, it seems that polling firms have learned little since 2016.

To assess the accuracy of polling, let's rely on the polling averages as calculated by RealClearPolitics. (Full disclosure: I proudly worked for RCP from 2010 to 2016.) The site's methodology is straightforward: An arithmetic average of the most recent polls is calculated, and these averages are used to predict the outcome in each state and the overall Electoral College vote. RCP does not conduct any polls itself; it simply relies on polls produced by other outlets.
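For readers who want to see how simple that arithmetic is, here is a minimal sketch in Python. It is not RCP's actual code. The `Poll` record, the `polling_average` function, and the `n_recent` cutoff are all assumptions made for illustration, since this article does not specify how many polls RCP includes or over what window. The poll numbers are invented placeholders, not real survey results.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Poll:
    """One survey result (hypothetical record layout)."""
    pollster: str
    end_date: date   # last day the poll was in the field
    dem: float       # Democratic candidate's share, in percent
    rep: float       # Republican candidate's share, in percent


def polling_average(polls, n_recent=5):
    """Unweighted arithmetic mean of the n_recent most recent polls.

    Returns (dem_avg, rep_avg, dem_minus_rep_margin).
    n_recent is an assumed cutoff, not a documented RCP parameter.
    """
    recent = sorted(polls, key=lambda p: p.end_date, reverse=True)[:n_recent]
    dem_avg = mean(p.dem for p in recent)
    rep_avg = mean(p.rep for p in recent)
    return dem_avg, rep_avg, dem_avg - rep_avg


# Invented placeholder polls for a single state.
example_polls = [
    Poll("Pollster A", date(2020, 10, 1), 50.0, 45.0),
    Poll("Pollster B", date(2020, 10, 20), 48.0, 46.0),
    Poll("Pollster C", date(2020, 10, 30), 49.0, 47.0),
]

dem_avg, rep_avg, margin = polling_average(example_polls, n_recent=3)
print(f"Dem {dem_avg:.1f}, Rep {rep_avg:.1f}, margin {margin:+.1f}")
```

Note that a plain average like this treats every poll equally. It makes no adjustment for sample size or for a pollster's track record, so it inherits whatever errors the underlying polls share.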

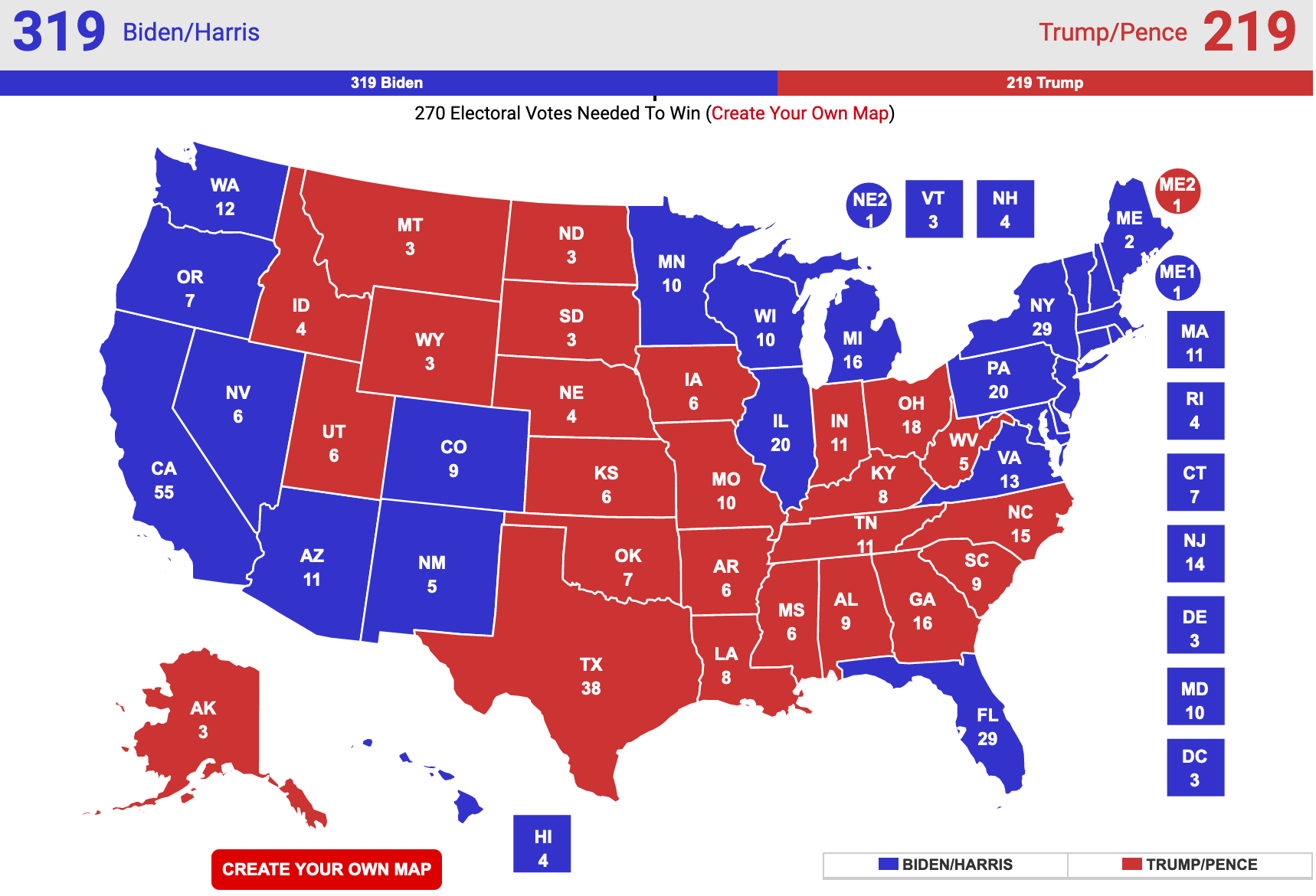

At first glance, pollsters appear to have done quite well. Here's the final Electoral College prediction from RCP:

This map is pretty close to reality. The pollsters got Florida wrong and possibly Pennsylvania wrong as well, but if current results hold, then the pollsters predicted 48 of 50 states correctly.

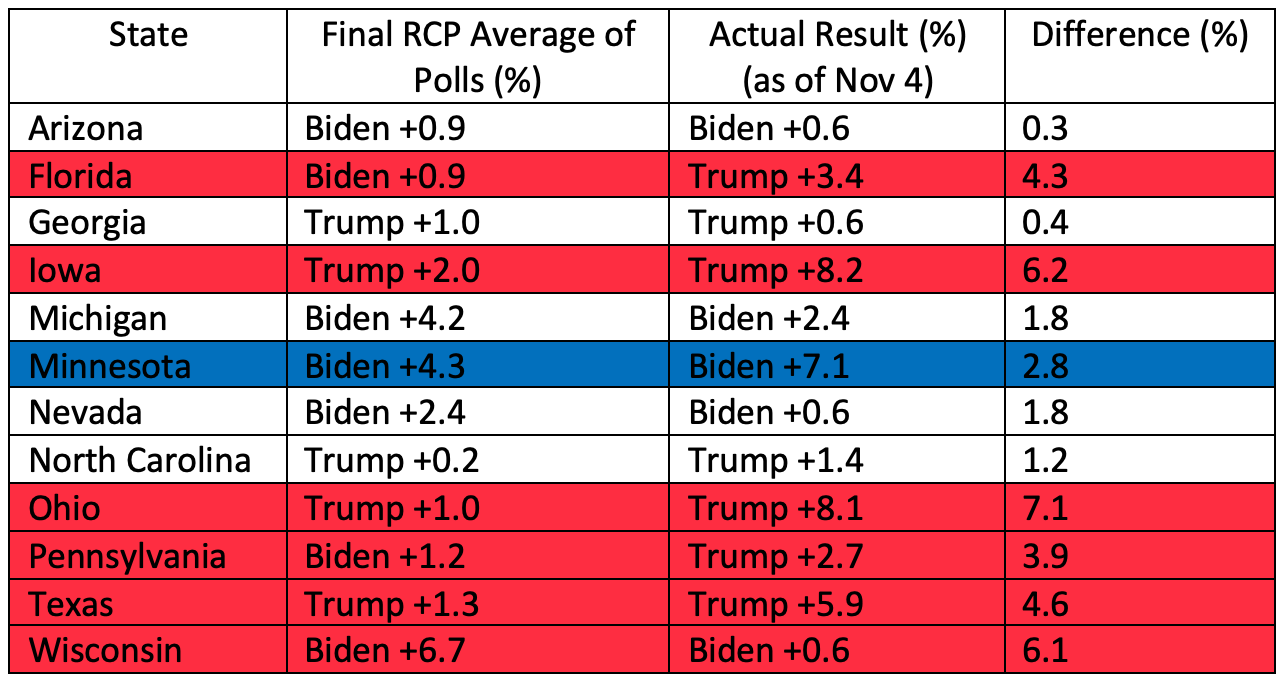

However, if you dig a little deeper, you'll see that the pollsters actually did quite poorly. To demonstrate why, let's compare the final predictions for the battleground states with the current results. (See chart below.)

Highlighted in red are the states in which the pollsters substantially under-predicted the support for Donald Trump. Pollsters were off by a whopping 7 percentage points in Ohio. In all the major battleground states except for one (Minnesota), the polls were systematically biased against Mr. Trump. Further, the polls under-predicted the performance of the Republican Party as a whole, which held the U.S. Senate and gained seats in the House, contrary to expectations.
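The difference between ordinary polling noise and a systematic bias can be made concrete with a short calculation. The sketch below, in Python, compares a predicted margin with an actual margin for each state and reports the signed error. The state names and numbers are invented placeholders, not the figures from the chart above, and the function names are hypothetical. If errors were random, they would scatter on both sides of zero and the average signed error would be small; a bias shows up as errors that mostly share the same sign.

```python
from statistics import mean


def signed_error(predicted_margin, actual_margin):
    """Actual minus predicted margin, in percentage points.

    Margins here are Republican minus Democrat, so a positive error
    means the polls understated the Republican's support.
    """
    return actual_margin - predicted_margin


def summarize(results):
    """results maps state -> (predicted_margin, actual_margin)."""
    errors = {s: signed_error(p, a) for s, (p, a) in results.items()}
    mean_signed = mean(errors.values())                # near zero if unbiased
    mean_abs = mean(abs(e) for e in errors.values())   # typical size of a miss
    understated_rep = sum(e > 0 for e in errors.values())
    return errors, mean_signed, mean_abs, understated_rep


# Invented placeholder margins (Republican minus Democrat), NOT real data.
placeholder_results = {
    "State A": (-1.0, 3.0),
    "State B": (2.0, 5.5),
    "State C": (-4.0, -4.5),
}

errors, mean_signed, mean_abs, understated_rep = summarize(placeholder_results)
for state, err in errors.items():
    print(f"{state}: error {err:+.1f} points")
print(f"Mean signed error: {mean_signed:+.2f}")
print(f"Mean absolute error: {mean_abs:.2f}")
print(f"States where polls understated the Republican: {understated_rep} of {len(errors)}")
```

A mean signed error well away from zero, with nearly every state missing in the same direction, is the pattern described above.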

There's Nothing Scientific About a Poll

Why? Well, that's hard to say. The President and his supporters will claim media bias. But there are better explanations. Perhaps Trump's supporters are hesitant to admit their voting intention to a pollster. Or perhaps American pollsters aren't as good as we thought. Even though the media uses the term "scientific poll," there's nothing scientific about a poll. Polling involves statistics and a heaping spoonful of art and intuition. It's more akin to a chef tweaking a recipe than a scientist refining an experiment. For instance, if assumptions about demographics or turnout are incorrect, the poll could be worthless. If sampling methods are inadequate, the poll could be worthless.

Does it matter? Yes. The entire point of a poll is to determine what the American public thinks about a particular topic. As David Graham writes for The Atlantic, "If polling doesn't work, then we are flying blind" when it comes to public opinion and policy. Pollsters had better figure this out, and quickly.