In the late 1990s, Michael J. Fox starred in a television comedy called Spin City. As its name implies, Fox's character's job was to "spin" the truth to make his boss (the mayor of New York City) look good.

We've come to expect this sort of behavior from politicians and their sycophants on cable news, but we don't expect it from scientists. Yet, a new paper published in PLoS Biology suggests that some scientists do just that.

Sensationalizing Science

The biggest purveyors of sensationalism are university press offices and the scientifically ignorant dupes in the media who eagerly reprint press releases, sometimes nearly verbatim. Environmentalists and other activists have also perfected the art of spin.

But this paper isn't about them; instead, it focuses on the research literature itself. And the authors found that scientists are not above hyping the data to make themselves look good.

The researchers, based at the University of Sydney, performed a systematic review of 35 papers, seven of which were of sufficient quality to perform a quantitative meta-analysis. (The 35 papers, which themselves examined the prevalence of spin in the literature, had to fit certain criteria to be included.) In general, "spin" is defined as conclusions that are not supported by the data, particularly if those conclusions are favorable to the authors. Also, papers that improperly imply causation are considered to contain spin.

The authors found spin in every category of study analyzed: diagnostic accuracy studies, observational studies, and clinical trials. Even systematic reviews and meta-analyses contained spin, even though these studies are designed (in part) to eliminate it. The prevalence of spin ranged from roughly 30% to 60%. (The authors note that all 10 papers examined about a defibrillator clinical trial showed spin, a prevalence of 100% in this small sample.)

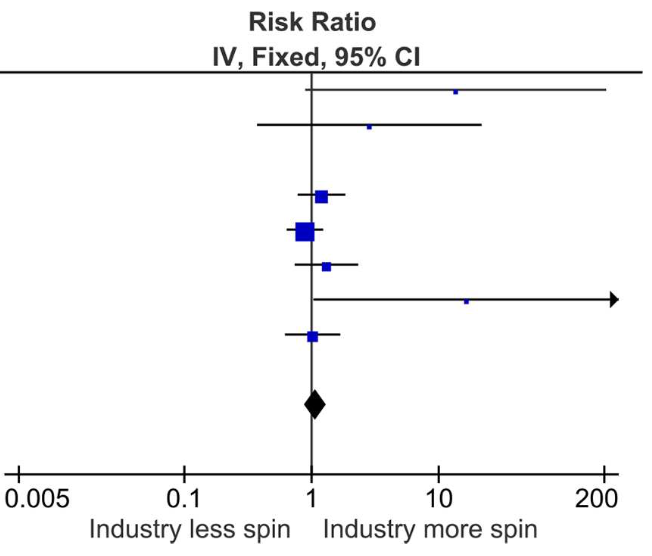

Interestingly, the authors found that studies funded by industry were no more likely to contain spin than studies that were not funded by industry. (See figure. A ratio of 1 on the X-axis signifies no difference between the two groups, so a result whose confidence interval crosses 1 is not statistically significant. The diamond represents the pooled estimate combining the data from all seven studies.)

Limitations

There are at least two major limitations to the study. First, the papers included in the systematic review and meta-analysis defined "spin" differently; the concept is therefore subjective rather than objective.

Second, the authors are not in a position to identify the root causes of spin. Rather than reflecting a willful intent to deceive or exaggerate, spin could result from a simple misunderstanding of statistics. Indeed, most scientists are not statisticians, and it is not uncommon for them to draw unwarranted conclusions from their experiments simply because they fail to appreciate the nuances of the statistical tests they employ.

Thus, intellectually honest people may come to different conclusions about whether a scientist is spinning his results. What looks like spin to one scientist may simply be a sincerely held but mistaken belief to another.

Still, the authors' data remind us of something that is all too often forgotten: Scientists are human, too, and they are flawed, just like everybody else. Just because a paper got published does not mean it is entirely accurate.

Source: Chiu K, Grundy Q, Bero L. "'Spin' in published biomedical literature: A methodological systematic review." PLoS Biol 15(9): e2002173. Published: 11-Sept-2017. DOI: 10.1371/journal.pbio.2002173