We cherish the myth of the "aha" moment, Edison and his lightbulb or Newton, his apple, and gravity, but study and reflection suggest that ideas "are not born as breakthroughs"; rather, "they need the attention and revision of a community of scientists to be developed into transformative discoveries." Two economists suggest that how we measure the work of scientists, and how they are subsequently rewarded and punished, may contribute to what is feared to be the "stagnation" of scientific discovery and innovation.

A model of scientific production – an idea’s lifecycle

New, and perhaps "good," ideas occur frequently. They come into our minds at little cost, but by themselves they have no value. It is difficult to see the real usefulness of our daydreams; often it requires exploration, "playing around," to discover useful insights and properties. And to be honest, much of this playing around culminates in nothing, which is why we might characterize it as play. Just like "overnight sensations" who worked in obscurity for 20 years, transformative science is built upon early tentative work; it rarely springs from an area not already explored to some degree.

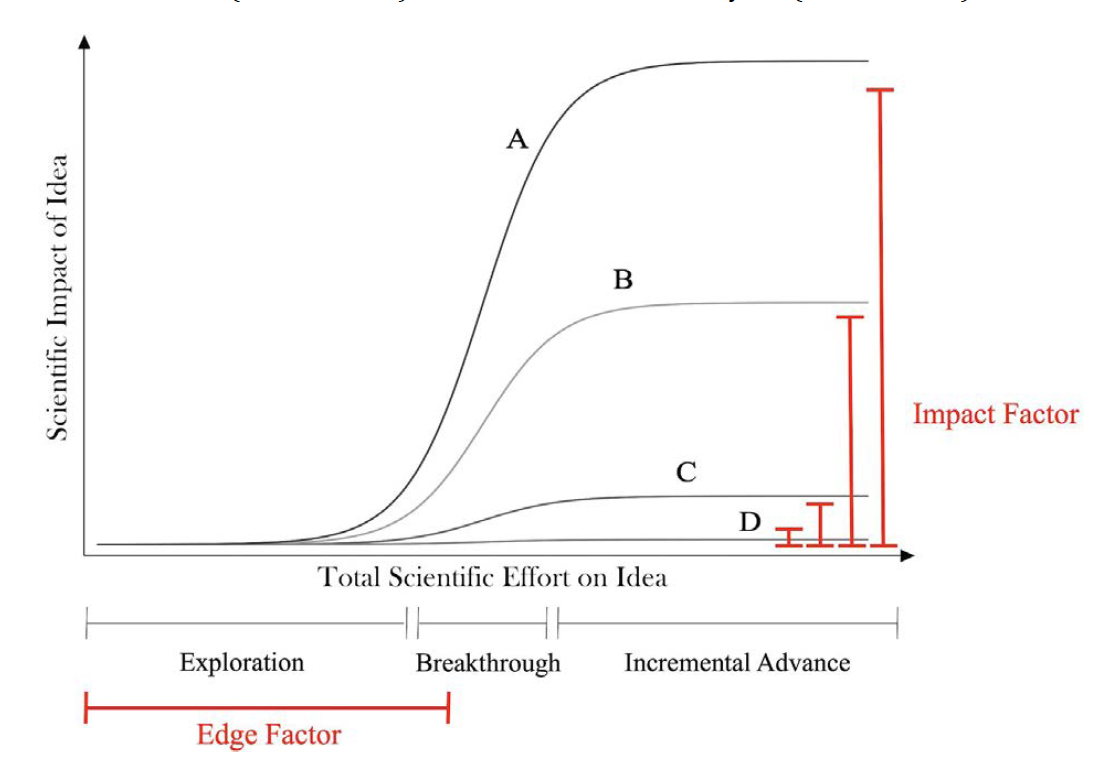

This is the period of exploration, when a group of scientists explores an idea's properties and usefulness, a period the researchers call "edge science" – working at the frontier of scientific knowledge. Much of the knowledge gained will never make it into the "literature" because much of it consists of negative results. Overall, exploration is an extended period with little scientific impact, yet it serves as the springboard for the breakthrough that follows.

“Most scientific work with new ideas falls into this category, as most new ideas fail in the sense that they do not develop into breakthroughs. While early exploratory work is crucial for subsequent breakthroughs, it is rare for scientists to reward such work with many contemporaneous (or even delayed) citations.”

In the breakthrough period, a large group of researchers begins to find utility in this early exploratory work, and a study finally breaks through as a high-profile paper in a high-impact journal. The article starts a virtuous cycle in which more scientists are recruited to explore this now popular, fruitful idea, and grants and funding follow. In this exponential phase, there are more and more articles. [1]

In an idea's final phase, scientists continue to mine the field, honing the idea and generating lots of incremental papers. For scientists and funding agencies, the idea is a "sure bet," almost a guarantee of "advancing" science with less intellectual risk. Much later in its life, the idea may be such a foundational concept that it is absorbed into the scientific dogma.

Scientists respond to incentives just like you and me – the “Citation Revolution”

In the marketplace of ideas, how do we measure the work of a scientist? In the days before big data, the emphasis was on volume, "publish or perish." But when you reward volume, you get volume without consideration of quality. The citation factor was designed to improve the measure of a scientist's work: the value of a scientific paper was judged by how often others cited the work, how far and wide its influence spread. In a competitive marketplace, journals then sought to find and publish the papers that would be most cited. They, in turn, could be judged by their "impact factor," a measure of how many frequently cited, and therefore impactful, papers they published.

The digital revolution, especially the advances in search by Google Scholar and its companion academic search engines, has accelerated and emphasized these metrics of influence. What better input for a ranking algorithm than a citation number, an impact factor, or some combination of the two? Nor was the value of an "influence" metric lost on administrators and funders, who could use these quantifiable "performance indicators" to identify influencers. These metrics are used in deciding tenure, promotion, and pay and have become the "coin of the realm" in many academic situations.

If we overlay citations on the lifecycle of an idea, we see that exploratory studies, that necessary incubation period, produce many unpublished negative results, and citations are few and far between. The value of citations as a measure of influence is more often seen in the breakthrough phase, when papers are heavily cited. Even when the idea has matured, incremental studies are being cited; only exploration is given short shrift. But it is the exploration that creates the shoulders to stand upon; without it, there are no breakthroughs.

“As citations gained prominence in research evaluation, this tilted incentives in favor of incremental science at the expense of more innovative science. Increased competition for resources has further exacerbated these distortions. It is thus not surprising that science has become more conservative in the decades that span the citation revolution: quantitative evidence from biomedicine shows that scientists are now less likely to try out new ideas in their work.”

Given this incentive system, are we surprised that most scientists study the nuances of the dogma, like Talmudic scholars, rather than exploring career-risking new ideas? Influence is important, but it is not sufficient. The researchers suggest that scientific contribution is multidimensional, involving "volume, impact, and novelty."

“Even baseball players are evaluated on more dimensions than scientists.”

In the real world, new ideas rarely become breakthroughs; most die in exploration. The graphic on the right may more adequately visualize the lifecycle. Here A produces a breakthrough, like CRISPR, and B produces an influential result, like combining two medications into one pill. C and D lead nowhere. It takes time to tell the good ideas from the dead ends, and as the researchers write, "the designation 'giant' extends to all the scientists working in the exploration phase, whether they work on ideas A, B, C, or D."

How do you measure exploration, the play involved in looking outside of the box, what the researchers previously described as "edge science"? Impact factors are not useful measures. The researchers do offer an initial metric of novelty: textual analysis. They argue that "new ideas in science often manifest themselves as new words and word sequences," so analyzing a paper's text can identify the ideas it uses and their age. As a result, one can identify studies that "advance relatively new ideas" and develop a measure of exploration – an edge factor.
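To make the idea of text-based novelty concrete, here is a minimal sketch in Python. It is not the researchers' method; it only illustrates the general approach under stated assumptions. It treats each two-word sequence (bigram) as a stand-in for an "idea," dates it by the earliest year it appears in a toy corpus, and scores a paper by the share of its bigrams that are young. The function names (`ngrams`, `first_seen`, `edge_score`), the five-year `window`, and the three-line corpus are all hypothetical choices made for illustration.

```python
import re
from typing import Dict, Iterable, List, Tuple

Ngram = Tuple[str, ...]

def ngrams(text: str, n: int = 2) -> List[Ngram]:
    """Lowercase the text, split it into words, and return its word n-grams."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

def first_seen(corpus: Iterable[Tuple[int, str]], n: int = 2) -> Dict[Ngram, int]:
    """Map each n-gram to the earliest publication year in which it appears."""
    birth: Dict[Ngram, int] = {}
    for year, text in corpus:
        for gram in ngrams(text, n):
            if gram not in birth or year < birth[gram]:
                birth[gram] = year
    return birth

def edge_score(year: int, text: str, birth: Dict[Ngram, int],
               window: int = 5, n: int = 2) -> float:
    """Share of a paper's distinct n-grams that are at most `window` years old.

    An n-gram missing from `birth` is treated as brand new (age 0).
    """
    grams = set(ngrams(text, n))
    if not grams:
        return 0.0
    young = sum(1 for g in grams if year - birth.get(g, year) <= window)
    return young / len(grams)

# Hypothetical toy corpus of (publication year, text) pairs.
corpus = [
    (1990, "gene expression in yeast"),
    (2000, "gene expression in mice"),
    (2001, "gene editing in mice"),
]
birth = first_seen(corpus)

new_idea_paper = edge_score(2001, "gene editing in mice", birth)      # all bigrams are young
old_idea_paper = edge_score(2001, "gene expression in yeast", birth)  # all bigrams are 11 years old
```

In this toy example, the 2001 paper built on recent word sequences scores 1.0, while a 2001 paper that reuses only 1990 vocabulary scores 0.0, regardless of how often either is cited. A real analysis would need a far larger corpus and some filtering of common phrases, which this sketch does not attempt.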

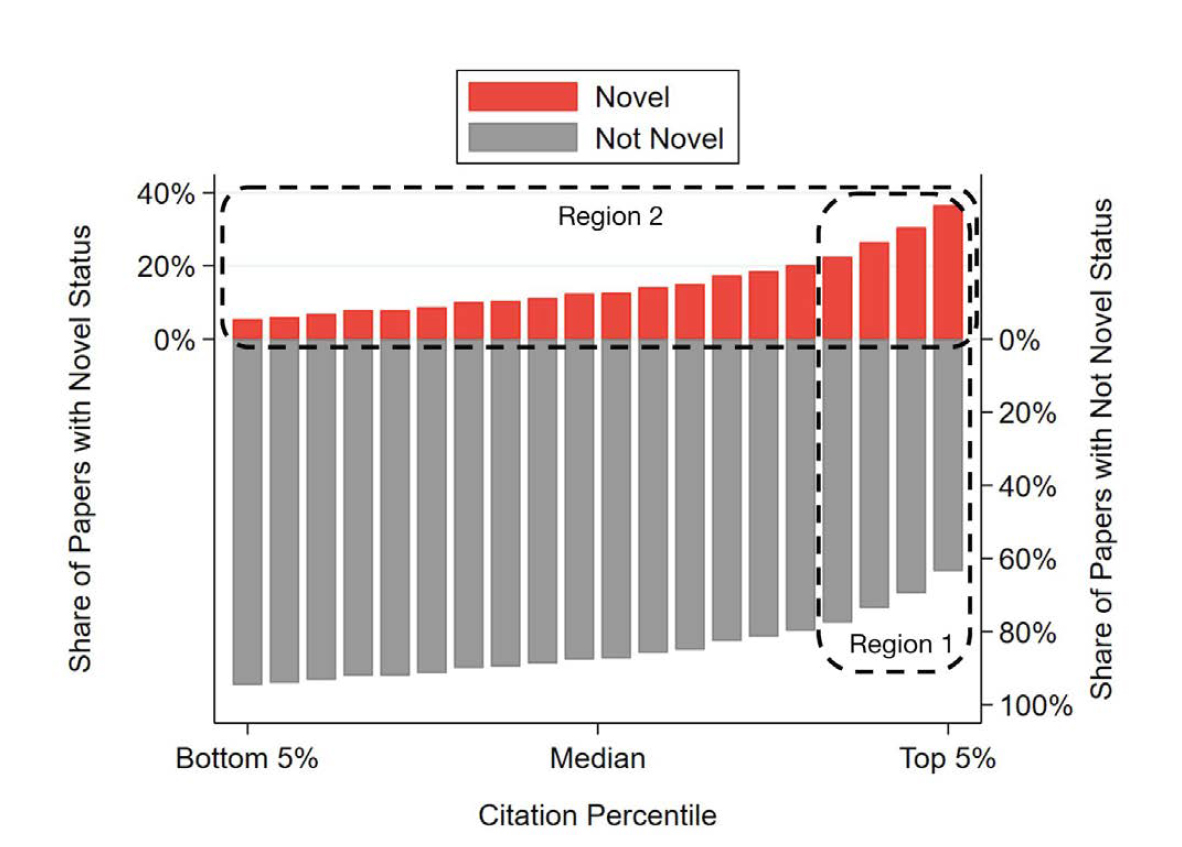

In prior work, they analyzed roughly 200,000 biomedical papers published in 2001, characterizing novelty and citations. As the graphic demonstrates, novelty and influence are distinct and require different measures.

They argue that measures of novelty would reward play and reduce the career risk of failing to find significant results when pursuing novel ideas. They are astute enough to recognize that a new metric will also bring new ways to "game" the system, where "scientists and journals may be tempted merely to mention new ideas rather than actually incorporate them into their work." They anticipate that future textual analysis will identify the cheats. Is it possible that

"…by encouraging and rewarding exploration, changes like these could increase the tolerance for failure and make it considerably easier to establish new scientific communities that explore and develop new areas of investigation. The birth of such communities, and the debate and scientific play that they facilitate, is a key component of a fruitful scientific enterprise."

[1] As the researchers point out, this increased attention and citation does not necessarily amount to a paradigm shift so much as an advance, and it doesn't necessarily mean the progress is TRUE; witness the back and forth over any nutritional component.

Source: Stagnation and Scientific Incentives NBER Working Paper 26752