The classic story about bias in artificial intelligence algorithms involves facial recognition [1]. A new study in Science documents a racially biased healthcare algorithm, and it tells us quite a bit about the current state of AI in healthcare.

The study

Health systems and insurance companies use algorithms to identify patients in need of enhanced care: the sickest patients, who use a “disproportionate” share of resources. They are often described as the 5% of patients who account for 20% of care. The goal of the AI is to identify these individuals and get them the help they need, and in the process improve outcomes and reduce cost. That is the value proposition.

The researchers looked at one commercially available, well-accepted AI algorithm for identifying these “special-needs” patients. The algorithm has an absolute cut-off above which patients are referred directly for enhanced services. A secondary cut-off defines a grey area, where the patient is referred to their physician to determine whether they have special needs. Although race was not a variable in the algorithm, the researchers, using a “large hospital’s” patients, found that the referrals were racially biased: Black Americans were identified as needing greater care less often than white Americans with the same number of chronic conditions. Having that human back-up didn’t rectify the situation. Black patients made up 17.7% of those the algorithm flagged for extra help; if the bias were eliminated, that share would rise to 46.5%, quite a difference.

The source of bias

“It kind of arose in a subtle way. When you look at the literature, ‘sickness’ could be defined by the health care we provide you. In other words, you’re sicker the more dollars we spend on you. The other way we could define sickness is physiologically, with things like high blood pressure.”

Sendhil Mullainathan [2]

It turns out that the algorithm was trained to predict dollars spent rather than the underlying physiology. There are many ways to identify special healthcare needs. As a clinician, I would look at those chronic conditions, weighting certain combinations more heavily, or at biomarkers like HbA1c or LDL, the “bad” cholesterol. The developers of the algorithm, insurance companies in the risk stratification business, chose cost. Their equation was: less well, more care, more cost. Except that for Black patients, being less well does not automatically translate into more care and, downstream, more cost. The link between how ill one is and the amount of care one receives is subject to all sorts of bias: lack of education, inability to get to a physician’s office, lack of trust, a range of sociodemographic issues. Health care costs are correlated with health care needs, but they are not the same.
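To make the label-choice problem concrete, here is a minimal Python sketch using an invented toy population, not the study’s data. It assumes two hypothetical groups, A and B, with identical numbers of chronic conditions, and assumes (purely for illustration) that group B receives 60% as much care per condition. The names `Patient`, `cost`, `illness`, and `top_k_share` are made up for this example; nothing here reproduces the commercial algorithm itself.

```python
from dataclasses import dataclass

@dataclass
class Patient:
    group: str       # hypothetical group label, "A" or "B"
    conditions: int  # number of active chronic conditions (true illness burden)

# Hypothetical dollars spent per chronic condition. Group B is assumed to
# receive less care for the same illness, standing in for access barriers.
SPEND_PER_CONDITION = {"A": 1000.0, "B": 600.0}

def cost(p: Patient) -> float:
    """Proxy label: dollars spent, which tracks care received, not illness."""
    return p.conditions * SPEND_PER_CONDITION[p.group]

def illness(p: Patient) -> int:
    """Alternative label: a direct (if crude) measure of how sick the patient is."""
    return p.conditions

def top_k_share(patients: list[Patient], key, k: int, group: str = "B") -> float:
    """Fraction of `group` among the k patients ranked highest by `key`."""
    ranked = sorted(patients, key=key, reverse=True)[:k]
    return sum(p.group == group for p in ranked) / k

# Toy population: both groups have exactly the same spread of illness (1 to 10 conditions).
population = [Patient(g, n) for g in ("A", "B") for n in range(1, 11)]

k = 4  # flag the top 20% of this 20-patient population for extra care
print(top_k_share(population, cost, k))     # 0.0: every flagged patient is from group A
print(top_k_share(population, illness, k))  # 0.5: flags split evenly, matching illness
```

The only thing that changes between the two calls is the outcome the ranking is asked to optimize. Ranking by cost is perfectly “accurate” about spending, yet it flags no one from group B, even though group B is exactly as sick.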

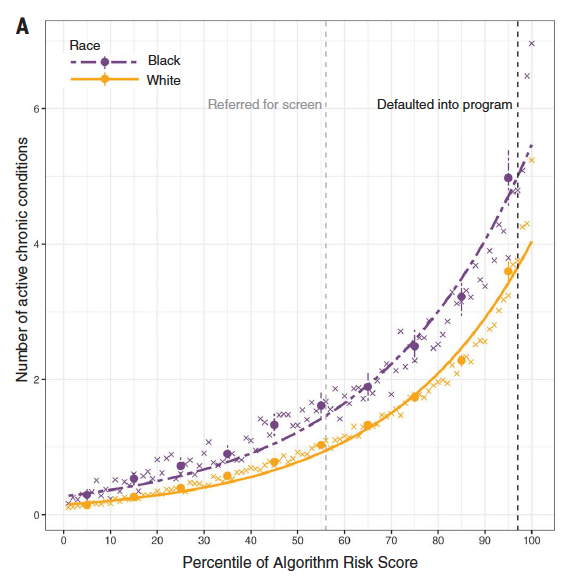

In this case, the bean counters chose the outcome of interest: money. Because AI is the ultimate in teaching to the test, the results were spot on in identifying high-cost patients; the algorithm just didn’t identify undertreated high-risk patients, and they were disproportionately Black. [3] Here is one graph from the study, showing the number of active chronic conditions against the algorithm’s score, along with the points at which the algorithm makes a direct referral (97th percentile) or refers the case to the primary physician (55th percentile). It is clear that Black patients must have more chronic illness than white patients to receive the same algorithmic score.
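The two cut-offs can be sketched as a simple percentile-based triage. This is a hypothetical illustration, not the vendor’s code: the thresholds (97th and 55th percentile) come from the study, but the percentile definition used here (the percent of scores strictly below a given score) and the names `percentile_rank` and `triage` are assumptions.

```python
from bisect import bisect_left

# Cut-offs as described in the study; the percentile definition is an assumption.
DIRECT_REFERRAL_PCTL = 97.0   # at or above: referred straight to enhanced care
PHYSICIAN_REVIEW_PCTL = 55.0  # at or above (but below 97th): primary physician decides

def percentile_rank(score: float, all_scores: list[float]) -> float:
    """Percent of scores in the population strictly below `score` (assumed definition)."""
    ordered = sorted(all_scores)  # sorted per call for clarity; sort once in real use
    return 100.0 * bisect_left(ordered, score) / len(ordered)

def triage(score: float, all_scores: list[float]) -> str:
    """Map a risk score (predicted cost, in this algorithm) to a referral tier."""
    pct = percentile_rank(score, all_scores)
    if pct >= DIRECT_REFERRAL_PCTL:
        return "direct referral"
    if pct >= PHYSICIAN_REVIEW_PCTL:
        return "physician review"
    return "no referral"

scores = [float(s) for s in range(100)]  # hypothetical scores 0.0 to 99.0
print(triage(98.0, scores))  # direct referral
print(triage(70.0, scores))  # physician review
print(triage(20.0, scores))  # no referral
```

Because the score being thresholded is predicted cost, a patient whose illness generates less spending ranks lower and can fall below both cut-offs despite having the same number of chronic conditions. That is the pattern the graph shows.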

Points to Ponder

This study illustrates so many issues. First, how do we translate something as ill-defined as needing extra care into variables we can measure? This is what data scientists call “problem formulation.” We can see clearly from this study that cost is not the best variable, at least when you are seeking to identify the severity of illness. The same is true for a variety of other algorithmic predictors. I may be able to identify the risk of death or complications, but neither measures what the patient needs to know: will their health be restored?

Second, where is the due diligence on the part of the algorithm’s designers and users? When two Boeing 737 MAX jets fell from the sky, there was rightfully a national furor. The crashes continue to be investigated to this day, and it seems that both Boeing and the pilots (user error) will be found culpable. But this study, which affects the health outcomes of millions of patients, possibly shortening their lives or diminishing their quality, goes barely noticed. And unlike with those airplanes, there is no equivalent of the National Transportation Safety Board to investigate, and the FDA cannot and does not hold anyone accountable. Should we settle for a “my bad” from a commercial enterprise that charges a lot of money for that algorithm? Should the hospitals (the users) be held to account for not understanding how the algorithm would affect their patients?

For those pointing to the fail-safe of physician screening, more bad news. The physicians did refer some of those grey-area patients. Still, they were not nearly as effective at identifying high-risk patients as the algorithm was when it was directed to look at medical problems rather than medical costs. If you look at the history of algorithmic flight, the Boeing case stands out as one of the few times the algorithm was at fault; usually it is pilot error. Similarly, you can expect that those invested in these AI approaches will point fingers at the physician users who were the human last line of defense.

Finally, there is this.

“Building on these results, we are establishing an ongoing (unpaid) collaboration to convert …into a better, scaled predictor of multidimensional health measures, with the goal of rolling these improvements out in a future round of algorithm development.”

The study was supported by a $55,000 grant from the National Institute for Health Care Management, a nonprofit founded by “a group of forward-thinking health plan CEOs.” This is an industry-funded study, but its result doesn’t raise a conflict-of-interest concern. But why point out that the ongoing collaboration is unpaid? Does this make the authors’ intentions purer? Or is it a kind of corporate welfare, where the work of four academics is turned into a commercial enterprise? It certainly explains why the algorithm’s developer was not identified.

[1] You can find the story in many places, including here.

[2] Quote from STAT.

[3] I suspect it would also under-identify the poor, or any group facing obstacles to healthcare access.

Source: “Dissecting racial bias in an algorithm used to manage the health of populations,” Science, DOI: 10.1126/science.aax2342